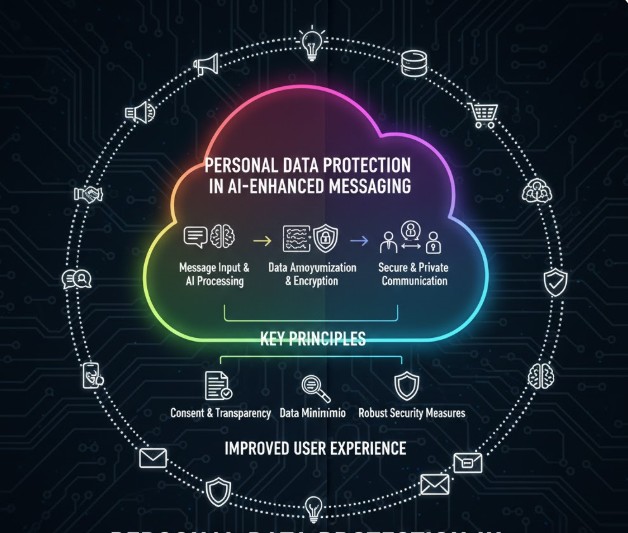

In today’s hyperconnected digital environment, protecting personal data in AI-enhanced messaging has become a critical priority for both organizations and users. As messaging platforms increasingly integrate artificial intelligence to deliver smarter, faster, and more context-aware interactions, safeguarding that data is essential to maintaining trust and preventing misuse. This article explores how AI-powered messaging systems can enhance functionality without compromising privacy or security.

1. Why Data Protection Matters in AI-Driven Messaging

AI-enhanced messaging systems process sensitive user information, ranging from personal identifiers to behavioral patterns. Without strong privacy safeguards, this data could be exposed, misinterpreted, or used in ways users never agreed to.

Users expect personalization, but they also expect safety. Balancing these two expectations is at the heart of modern privacy strategies.

2. How AI Uses Data Responsibly

AI-driven messaging relies on patterns, context, and historical interactions to offer accurate suggestions and automation. A responsible design limits AI models to the data a feature actually needs, avoids over-collection, and keeps information no longer than required.

Techniques such as anonymization and tokenization remove or replace direct identifiers so that raw personal data is not exposed to the model or to downstream systems.
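To make the idea of tokenization more concrete, here is a minimal Python sketch. It assumes that e-mail addresses and phone numbers are the identifiers to hide, and that a keyed hash is an acceptable token format. The names `SECRET_KEY`, `make_token`, and `tokenize_message` are hypothetical, and the patterns are deliberately simple, so treat this as an illustration rather than a production-grade detector.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice it would come from a key store, not source code.
SECRET_KEY = b"replace-with-a-secret-from-a-key-store"

# Deliberately simple patterns for illustration; real PII detection needs more care.
# One combined pattern means the text is scanned once, so tokens are never re-scanned.
PII_RE = re.compile(
    r"(?P<email>[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,})"
    r"|(?P<phone>\+?\d[\d\s-]{7,}\d)"
)


def make_token(value: str, kind: str) -> str:
    """Return a stable, keyed token that stands in for a personal identifier."""
    digest = hmac.new(SECRET_KEY, value.lower().encode("utf-8"), hashlib.sha256)
    return f"<{kind}:{digest.hexdigest()[:12]}>"


def tokenize_message(text: str) -> str:
    """Replace e-mail addresses and phone numbers before the text reaches an AI model."""
    def _replace(match: re.Match) -> str:
        # lastgroup is the name of the alternative that matched: "email" or "phone".
        return make_token(match.group(), match.lastgroup)

    return PII_RE.sub(_replace, text)


if __name__ == "__main__":
    print(tokenize_message("Reach me at alice@example.com or +1 555-123-4567"))
```

Because the same input always maps to the same token, the model can still tell that two messages mention the same contact without ever seeing the address or number itself.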

3. Consent and Transparency: The Building Blocks of Trust

Clear communication about what data is collected and how it is used strengthens user trust. When users understand how AI-enhanced messaging benefits them, for example through faster responses, better recommendations, or more intuitive support, they are more likely to feel comfortable sharing information.

Consent-based systems offer users control over their preferences and data-sharing boundaries.
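As a rough illustration of a consent-based design, the Python sketch below gates an AI feature on an explicit opt-in. Everything here is an assumption made for the example: the `ConsentRecord` class, the `"smart_replies"` purpose name, and the placeholder `suggest_reply` function do not come from any particular platform.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class ConsentRecord:
    """Purposes a user has explicitly agreed to (hypothetical structure)."""
    user_id: str
    granted_purposes: Set[str] = field(default_factory=set)

    def grant(self, purpose: str) -> None:
        self.granted_purposes.add(purpose)

    def revoke(self, purpose: str) -> None:
        # discard() does nothing if the purpose was never granted.
        self.granted_purposes.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted_purposes


def suggest_reply(message: str, consent: ConsentRecord) -> Optional[str]:
    """Run the (placeholder) AI feature only if the user opted in."""
    if not consent.allows("smart_replies"):
        return None  # default: no processing without consent
    return f"Suggested reply to: {message!r}"  # stand-in for a real model call


if __name__ == "__main__":
    consent = ConsentRecord(user_id="user-1")
    print(suggest_reply("Are we still on for Friday?", consent))
    consent.grant("smart_replies")
    print(suggest_reply("Are we still on for Friday?", consent))
```

The design choice worth noting is the default: a new user has granted nothing, so the feature stays off until they opt in, and revoking consent takes effect on the next call.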

4. Minimizing Data Exposure Through Strong Security Practices

Security plays a central role in protecting personal data in AI-enhanced messaging. Techniques such as encryption, secure authentication, and data segregation help prevent unauthorized access.

Modern systems increasingly adopt principles such as “security by design,” ensuring privacy protection is embedded from the start rather than added later.
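Of the techniques named above, secure authentication is the easiest to sketch with Python's standard library alone. The example below stores a salted, slow hash of a password instead of the password itself and compares hashes in constant time. The function names and the iteration count are assumptions chosen for illustration, not a recommendation for any specific system.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # assumed work factor for the example; tune for real hardware


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) so the plain-text password never needs to be stored."""
    salt = os.urandom(16)  # a fresh random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    salt, stored = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, stored))
    print(verify_password("wrong guess", salt, stored))
```

Encryption of message content would normally rely on a vetted cryptography library rather than hand-written code, which is why it is left out of this sketch.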

5. Reducing Bias and Preventing Misuse

AI models trained on biased or irrelevant data can unintentionally produce unfair or inaccurate outputs. Protecting personal data includes ensuring that datasets are responsibly curated, ethically sourced, and free from harmful patterns.

This approach helps keep the system safe, fair, and trustworthy for all users.

6. The Role of Data Minimization

Data minimization ensures that systems collect only what is essential for a specific function. This reduces risk, simplifies compliance, and improves transparency.

In AI-enhanced messaging, minimization helps ensure that personalization does not become intrusive.
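One simple way to apply data minimization in code is an allowlist per purpose, as in the Python sketch below. The purposes and field names in `ALLOWED_FIELDS` are hypothetical, and the `minimize` helper is only one possible shape for such a filter.

```python
from typing import Any, Dict, Set

# Hypothetical mapping of each feature to the only fields it may read.
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "smart_replies": {"message_text", "language"},
    "delivery": {"recipient_id", "message_text"},
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Return a copy of the record containing only fields allowed for this purpose."""
    allowed = ALLOWED_FIELDS.get(purpose, set())  # an unknown purpose gets nothing
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    record = {
        "message_text": "See you at 6?",
        "language": "en",
        "recipient_id": "r-42",
        "location": "Paris",
        "contacts": ["a", "b"],
    }
    print(minimize(record, "smart_replies"))
```

With this shape, a field such as location or the contact list never reaches the reply-suggestion feature unless someone deliberately adds it to the allowlist, which also makes the data each feature uses easy to audit.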

7. Building Trust Through Ethical AI Principles

Beyond technical safeguards, trustworthy AI relies on ethical practices: fairness, transparency, accountability, and respect for user autonomy. These principles reinforce long-term trust and help ensure that AI serves users responsibly.

Conclusion

Protecting personal data in AI-enhanced messaging is a foundational requirement for building secure, intelligent, and user-centered communication tools. As AI continues to shape the future of messaging, organizations must commit to responsible data practices that protect users while still delivering the efficiency and personalization they expect. Strong privacy safeguards not only reduce risk but also create systems that people feel confident using every day.